Majority of Deepfake Offenders Are Teenagers, Social Media Ads Promote Dangerous Apps

According to police data, 80% of people accused of deepfake sexual crimes, in which images are manipulated to create explicit content, are teenagers. Alarmingly, 16% of all suspects are under the age of 14 and therefore cannot be criminally punished under South Korean law.

On popular social media platforms such as Instagram and TikTok, advertisements for deepfake creation apps are widespread, exposing young users to tools that can be used to commit crimes, with few safeguards in place. Critics argue that these platforms, which have millions of users in South Korea, are neglecting their social responsibility by failing to manage harmful content effectively.

According to the National Police Agency, 1,094 cases of deepfake sexual crimes were reported between January and November of this year, and 573 suspects were apprehended. Of these, 463 (80.8%) were teenagers, including 94 (16.4% of all suspects) who were under 14 and exempt from criminal punishment. The remaining suspects included 87 in their 20s (15.2%), 17 in their 30s (3.0%), and a handful in their 40s and 50s.

Police receive nearly seven such reports a day on average and plan to keep dedicated investigation teams cracking down on cyber sexual violence until the end of March next year.

The problem is compounded by the rampant spread of harmful content on the social media platforms teenagers use most, fueling concerns that young people are especially vulnerable to deepfake crimes.

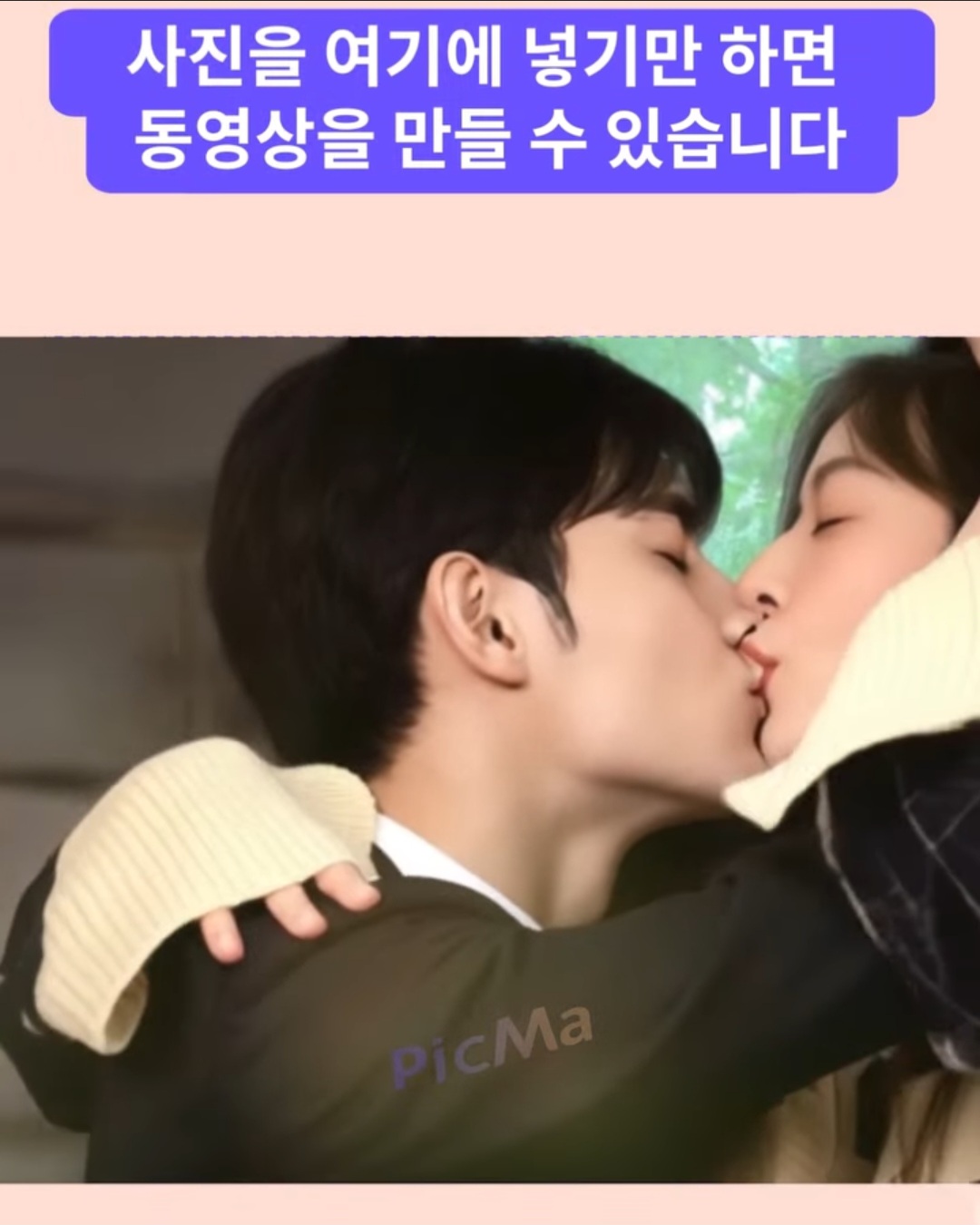

Deepfake apps are openly advertised on major platforms like Instagram, TikTok, and X (formerly Twitter). One advertisement demonstrated how users could create explicit content by simply uploading two photos and clicking a few buttons, enticing viewers with phrases like "Kiss your crush". The ads have garnered comments expressing eagerness to download the apps, indicating a troubling trend of unrestricted exposure to such content.

Despite claims from social media companies that they can block illegal advertisements using AI, deepfake ads continue to slip through the cracks. Companies argue that the sheer volume of ads makes it impossible to monitor each one individually, raising concerns about the effectiveness of their self-regulation.

There are also numerous ads for prostitution disguised with coded keywords such as 'conditions' and 'meetings'. One recent Instagram ad featured a woman's face alongside a solicitation phrase and targeted Korean-speaking men aged 25 and older. Users have reacted with disbelief at how blatant these ads are, questioning what standards the platforms use to judge content inappropriate.

Moreover, deepfake promotional accounts have exploited the blue verification checkmark, which was originally intended to prevent identity theft. Now, for a monthly fee, anyone can obtain this mark, leading to its misuse by illegal advertisers. Recently, an account promoting a site for creating adult deepfake videos was suspended after being reported multiple times.

Critics argue that social media companies prioritize profit over their responsibility to manage illegal and potentially harmful advertisements. X's terms of service state that users may encounter inappropriate content and that the platform cannot monitor or control all content, effectively absolving itself of responsibility. Meta has acknowledged the challenges in reviewing ads, stating that both machines and humans can make mistakes, which has led to accusations of superficial accountability.

Experts emphasize that social media platforms are shirking their social responsibilities. Professor Hwang Seok-jin from Dongguk University’s Graduate School of International Information Security stated that allowing illegal ads to proliferate while profiting from them is tantamount to abandoning their societal obligations.

In a related development, police have identified new instances of deepfake-related extortion targeting public officials. Recently, 44 male local council members across the country received threatening emails containing deepfake sexual content, marking a shift from the previous trend of targeting women. Authorities are now monitoring the potential spread of deepfake crimes against not only ordinary citizens but also prominent politicians and government officials.
